TAPAS Dataset

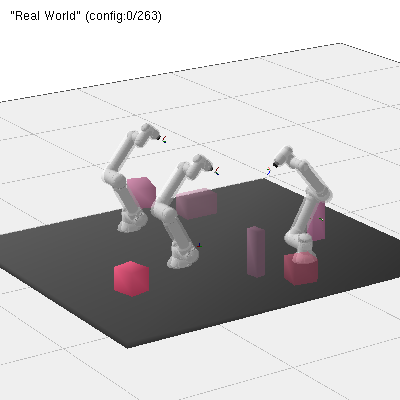

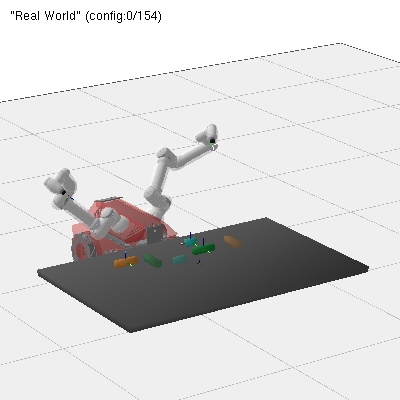

The TAPAS dataset consists of 204k task and motion plans for 7,000 different randomized scenarios containing up to 4 robot arms. In every scene, the goal is to move the objects to their corresponding goal poses using (possibly collaborative) pick and place.

Scenarios

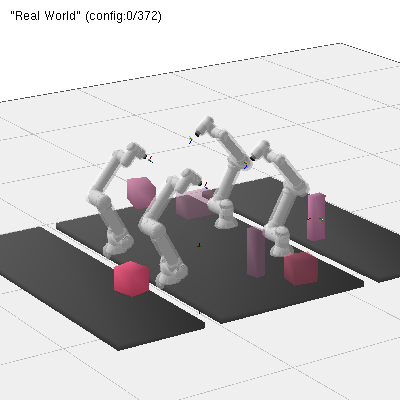

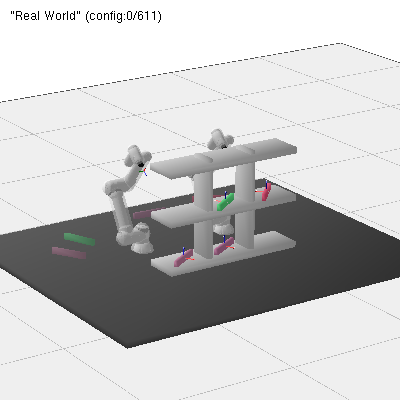

We currently have 4 base scenes (from left to right, illustrated above): random, with up to 4 arms with random base position and orientation; husky, with two arms on a husky base; conveyor, a conveyor-like setting with 4 arms where objects have to be moved from the middle to the outside; and shelf, a setting with a shelf and 2 arms.

Randomization

We randomize the object sizes as well as the start and goal poses of the objects, using a different sampling scheme for each scene:

- Random: We sample the robot base positions uniformly on the table while making sure that the arms do not collide with each other in their home pose. The base orientation is sampled uniformly from 0 to 360 deg. The objects are likewise sampled uniformly on the table.

- Husky: For the husky, we sample start positions and goal positions randomly.

- Conveyor: The conveyor setting is inspired by object sorting: all start poses are in the middle, and all goal poses are on the outside tables.

- Shelf: For the shelf scenario, we sample the start poses in the shelf, and the goal poses on the table.
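The uniform sampling with a collision constraint described for the random scene can be illustrated with simple rejection sampling. This is only a minimal sketch, not the generator used for the dataset: the function name `sample_poses`, the table dimensions, and the `min_dist` threshold are hypothetical, and a plain distance check stands in for whatever collision check is actually used.

```python
import math
import random


def sample_poses(n, table_size=(2.0, 1.0), min_dist=0.3, rng=None, max_tries=10_000):
    """Sample n planar poses (x, y, yaw_deg) uniformly on a rectangular table.

    All parameter values are illustrative assumptions, not dataset settings.
    """
    rng = rng or random.Random()
    poses = []
    tries = 0
    while len(poses) < n:
        if tries >= max_tries:
            raise RuntimeError("could not place all poses; relax min_dist")
        tries += 1
        # Position: uniform over the table surface.
        x = rng.uniform(0.0, table_size[0])
        y = rng.uniform(0.0, table_size[1])
        # Reject candidates that are too close to an accepted pose.
        # The distance threshold is a stand-in for a real collision check.
        if all(math.hypot(x - px, y - py) >= min_dist for px, py, _ in poses):
            # Orientation: uniform over 0 to 360 degrees.
            poses.append((x, y, rng.uniform(0.0, 360.0)))
    return poses
```

Calling `sample_poses(4, rng=random.Random(0))` returns four reproducible poses. Passing a seeded `random.Random` keeps a scenario reproducible without touching the global random state.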

Contents

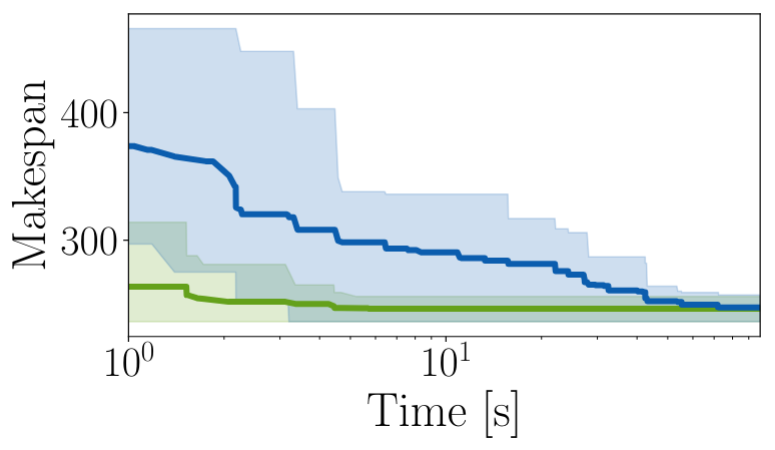

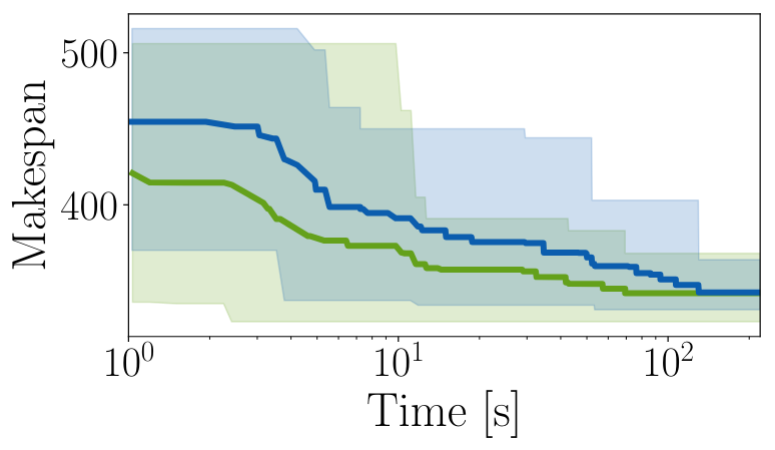

For each scene, we generate one or more possible task sequences for the robots, and then generate a full motion plan from each sequence using our multi-agent task and motion planner (described in the paper). Each task and motion plan contains:

- The trajectory for each robot, with each step containing the joint pose of the robot, the end effector pose, and the symbolic state.

- The plan, with start and end times of an action for each robot.

- The sequence from which the plan was generated.

- A scene file, describing all the objects in the scene.

- A metadata file, with the makespan of the plan and the number of robots and objects in the scene.
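To make the relation between the plan and the metadata concrete, the sketch below models a plan as a list of timed actions and derives the metadata fields from it. The class `Action`, its field names, and the dictionary keys are hypothetical and do not reflect the actual file format of the dataset. The makespan is taken to be the latest end time over all actions, assuming the plan starts at time 0.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """One timed action of one robot (field names are assumptions)."""
    robot: str
    name: str
    start: float
    end: float


def makespan(plan):
    """Latest end time over all actions; 0.0 for an empty plan."""
    return max((a.end for a in plan), default=0.0)


def summarize(plan, num_objects):
    """Build a metadata-like dict (keys are hypothetical)."""
    robots = {a.robot for a in plan}
    return {
        "makespan": makespan(plan),
        "num_robots": len(robots),
        "num_objects": num_objects,
    }
```

For a plan in which one robot finishes its last action at 7.5 and another at 4.5, `makespan` returns 7.5, since the plan is only complete once the slowest robot is done.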